replicAnt: a data generator to create annotated images

Deep learning in computer vision is revolutionising the study of animal behaviour. Transfer learning makes these methods accessible for non-model species, but it still relies on manual annotation and works well only in specific conditions. To address these challenges, Fabian Plum, René Bulla, Hendrik K. Beck, Natalie Imirzian, and David Labonte developed replicAnt, described in their Nature Communications paper “replicAnt: a pipeline for generating annotated images of animals in complex environments using Unreal Engine”. This adaptable pipeline, built with Unreal Engine 5 and Python, creates diverse training datasets on standard hardware. By placing 3D animal models in computer-generated environments, replicAnt generates annotated images automatically. It thus significantly reduces manual annotation and improves performance in applications such as animal detection and tracking. Furthermore, networks trained with replicAnt data become more flexible and robust when applied to new recording conditions. In some cases, replicAnt could eliminate the need for manual annotation entirely. Here, first author Fabian Plum shares some pictures and descriptions of replicAnt.

A Photoblog contribution by Fabian Plum

Edited by Roberta Gibson, Patrick Krapf, and Alice Laciny

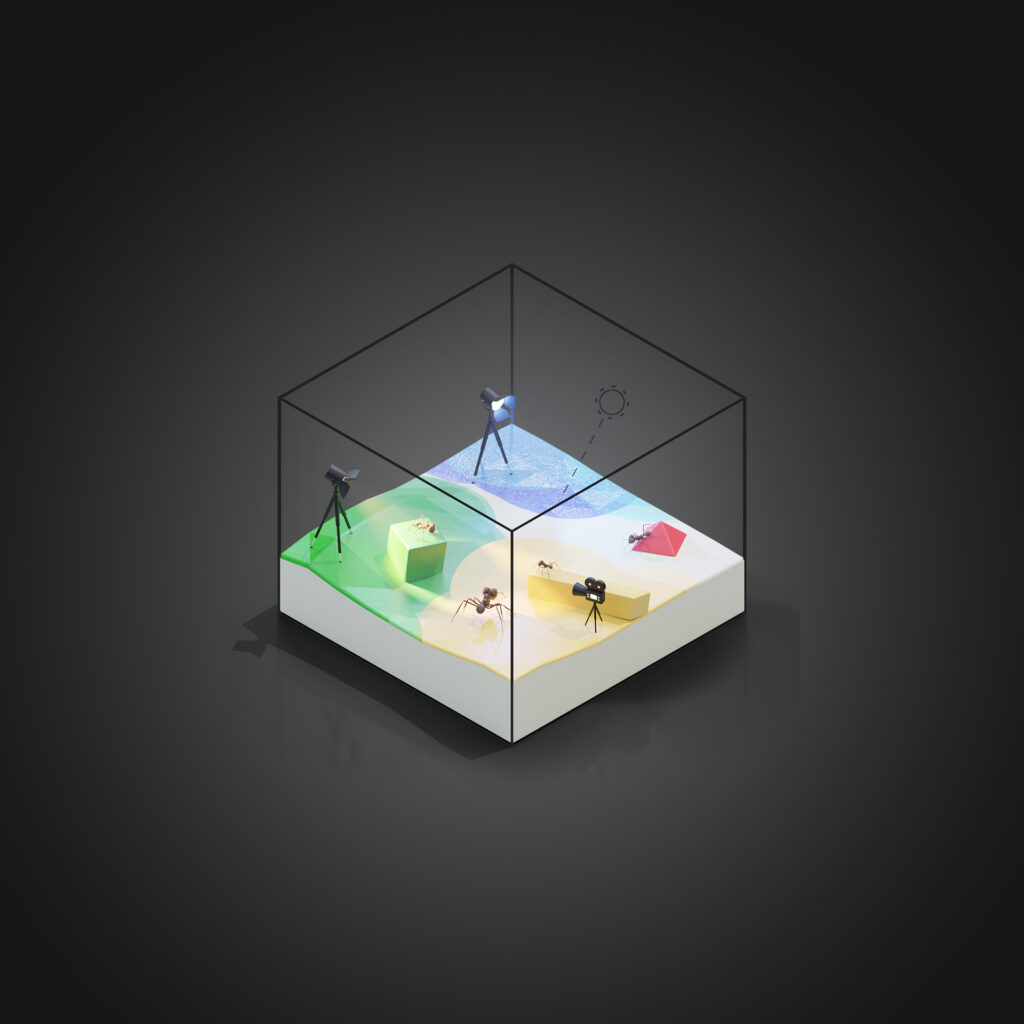

Stylised render of the replicAnt data generator, adapted from the original first figure in the paper. 3D scanned animals, digitised with scAnt (our open-source macro 3D scanner https://peerj.com/articles/11155/), are placed into randomly generated worlds. These worlds consist of a large ground plane with randomised hills and valleys, which are populated with thousands of 3D models of common household objects, plants, and rocks. The automated data generator is built within Unreal Engine 5, a powerful 3D software commonly used in video game and film productions.
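The idea of a randomised world can be illustrated with a minimal Python sketch. This is not replicAnt's actual Unreal Engine implementation: the function names `make_heightmap` and `scatter_objects`, the sum-of-sine-waves terrain, and the asset names are all assumptions made purely for illustration.

```python
import math
import random


def make_heightmap(size=32, n_waves=6, seed=0):
    """Build a size x size grid of heights by summing random sine waves.

    Hypothetical stand-in for the randomised hills and valleys; the real
    generator runs inside Unreal Engine 5.
    """
    rng = random.Random(seed)
    # Each wave: (frequency in x, frequency in y, phase, amplitude)
    waves = [
        (rng.uniform(0.05, 0.4), rng.uniform(0.05, 0.4),
         rng.uniform(0.0, 2.0 * math.pi), rng.uniform(0.2, 1.0))
        for _ in range(n_waves)
    ]
    return [
        [sum(a * math.sin(fx * x + fy * y + p) for fx, fy, p, a in waves)
         for x in range(size)]
        for y in range(size)
    ]


def scatter_objects(heightmap, asset_names, n_objects=100, seed=0):
    """Place randomly chosen assets at random grid cells, resting on the terrain."""
    rng = random.Random(seed)
    size = len(heightmap)
    placed = []
    for _ in range(n_objects):
        x, y = rng.randrange(size), rng.randrange(size)
        placed.append({
            "asset": rng.choice(asset_names),  # illustrative asset label
            "x": x,
            "y": y,
            "z": heightmap[y][x],              # object sits on the ground
            "yaw_deg": rng.uniform(0.0, 360.0),
        })
    return placed


terrain = make_heightmap()
world = scatter_objects(terrain, ["household_object", "plant", "rock"])
```

Changing the `seed` yields a different terrain and object layout, which is the property that makes each generated scene distinct.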

Demo animation of the replicAnt pipeline, showing how complex synthetically generated data can be produced using 3D animal models, to which the user assigns virtual joints and bones. Data can then be used to train a variety of computer vision tools, to automatically locate the animal in question as well as key body landmarks without the need to attach markers or physical tracking devices to the studied specimens. This allows for non-intrusive analysis of freely moving animals under natural conditions with minimal manual effort.

Example images generated for a digital population of leafcutter ants (Atta vollenweideri), built from scanned specimens covering the typical worker size range between 1 and 50 mg.

Example of a “rigged” 3D model of a Dinomyrmex gigas specimen, where user-defined joints are used to articulate the digital version of the scanned animal.

Pose-estimation example for freely moving Atta vollenweideri. The underlying deep neural network (DeepLabCut with a ResNet152 backbone) has been trained on a 10:1 mix of synthetically generated and hand-annotated data.
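As a rough illustration of what a 10:1 mix means in practice, the sketch below assembles a training list with ten synthetic samples for every hand-annotated one. The function `mix_datasets`, the file names, and the dataset sizes are hypothetical; this is not the authors' training code, and DeepLabCut manages its own dataset configuration.

```python
import random


def mix_datasets(synthetic, real, ratio=10, seed=0):
    """Return a shuffled list with `ratio` synthetic samples per real sample.

    If fewer synthetic samples are available than requested, all of them
    are used.
    """
    rng = random.Random(seed)
    n_synthetic = min(len(synthetic), ratio * len(real))
    chosen = rng.sample(synthetic, n_synthetic)
    mixed = [(path, "synthetic") for path in chosen]
    mixed += [(path, "real") for path in real]
    rng.shuffle(mixed)
    return mixed


# Hypothetical file lists, for illustration only
synthetic_images = [f"synthetic_{i:05d}.png" for i in range(1000)]
real_images = [f"real_{i:03d}.png" for i in range(50)]

training_set = mix_datasets(synthetic_images, real_images)
```

With 50 hand-annotated images, the mix draws 500 synthetic images, giving 550 training samples in total.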

A short demo illustrating how synthetic data can be used to predict the location of key body landmarks without attaching physical markers to the animal. The video first shows the pose-estimation performance of a deep neural network (DeepLabCut with a ResNet101 backbone) trained exclusively on synthetically generated data of a Sungaya inexpectata specimen, and then transitions to a key point overlay from the same network after refinement on a handful of hand-annotated samples. The network shown has been trained on 10,000 synthetically generated images and refined on 50 hand-annotated samples, 5 from each shown video.

OmniTrax, an in-house developed multi-animal tracking and pose-estimation Blender Addon (https://github.com/FabianPlum/OmniTrax), can interface directly with various detection and pose-estimation networks to make it easy for users to automatically track and annotate large video files with hundreds of freely moving animals. In this demo video, we load detector networks (YOLOv4) trained exclusively on synthetic data generated by replicAnt into OmniTrax and show how we can robustly track animals under challenging laboratory as well as field recording conditions. OmniTrax allows users to easily make manual changes to and clean up the tracking results where required, to facilitate the annotation and evaluation of large video datasets.

Example lighting simulation in replicAnt, showing a 3D scanned Leptoglossus zonatus specimen.
